Balancing Innovation and Risk: A Responsible Approach to AI Data Governance

AI is transforming business, but many companies are unaware of the compliance risks it brings. Without governance, sensitive data can be exposed to AI tools. Learn how In Marketing We Trust built an AI compliance framework to protect data, ensure legal compliance, and support innovation while keeping risk under control.

Over the past few weeks, I have attended multiple events and roundtable discussions on AI adoption. One theme kept surfacing: compliance, or rather, the lack of it.

Many business leaders fell into one of two camps. Some believed they were not using AI at all (hint: they probably were). Others had AI adoption scattered across their organisation, with no central oversight. Compliance teams were either unaware of AI usage or struggling to keep up with fragmented deployments.

Hearing these conversations, one thing became crystal clear: businesses are exposing themselves to massive data risks without realising it.

At In Marketing We Trust, we are in a unique position. Not only do we use AI extensively in our own business, but we also consult on, build, and implement AI solutions for our clients. This means we have had to think deeply about balancing AI-driven innovation with responsible data governance. So, how do we tackle this challenge?

Why We Have an AI Policy

Let us be clear: anything involving data is a risk. Full stop. No exceptions. Data breaches do not just result in financial penalties; they destroy reputations. This is particularly critical for agencies and service businesses that handle other organisations' data. We must treat that data with the same diligence and care as if it were our own personal information.

AI complicates this further. Many AI services retain the inputs they receive, and some use them to train future models, potentially exposing confidential or sensitive information. If an AI tool is not properly vetted, a seemingly harmless query could lead to data leaks or regulatory violations.

This is why we have an AI Data Policy that governs how we evaluate, use, and manage AI tools. It ensures we innovate responsibly, protect data privacy, and comply with global regulations.

What Are the Risks?

The main risks associated with AI adoption fall into three categories:

1. Data Privacy & Security

- AI tools may collect, store, or even use inputs for training, leading to potential breaches of GDPR, CCPA, or APPs (Australian Privacy Principles).

- Many AI services process data in jurisdictions with weaker data protection laws.

- Users may unknowingly input confidential or personally identifiable information (PII) into AI models.

2. Regulatory & Legal Compliance

- Companies are often unaware that AI-generated content and decisions still fall under existing data laws.

- Failure to comply with regulations can result in heavy fines (e.g. the most serious GDPR infringements can attract fines of up to €20 million or 4% of total worldwide annual turnover, whichever is higher).

- Clients are increasingly scrutinising service providers for data compliance, and failing to meet their standards can be a dealbreaker.
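The 4% figure is only half of the rule. For the most serious infringements, GDPR Article 83(5) caps fines at €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher, so smaller businesses face the fixed cap rather than a proportionally smaller one. A minimal sketch of that ceiling, assuming turnover is given in whole euros (the function name is illustrative):

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Ceiling for the upper tier of GDPR fines (Article 83(5)).

    Returns the higher of a fixed EUR 20 million or 4% of total worldwide
    annual turnover for the preceding financial year. This is a legal
    maximum, not a prediction of what a regulator would actually impose.
    """
    fixed_cap = 20_000_000
    # Integer arithmetic in whole euros avoids floating-point rounding noise.
    turnover_cap = worldwide_annual_turnover_eur * 4 // 100
    return max(fixed_cap, turnover_cap)


# A EUR 2bn business: 4% (EUR 80m) exceeds the fixed cap.
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000
# A EUR 50m business: 4% is only EUR 2m, so the EUR 20m cap applies.
print(gdpr_max_fine_eur(50_000_000))  # 20000000
```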

3. Reputational & Ethical Risks

- Mishandling AI can lead to biased outputs, intellectual property issues, and ethical concerns.

- High-profile AI failures have led to public backlash and loss of customer trust.

- A single incident can result in lasting reputational damage.

How We Are Tackling the Issue

After witnessing these challenges first-hand at industry events, I knew we needed a structured, proactive approach. Here is how we have addressed the problem:

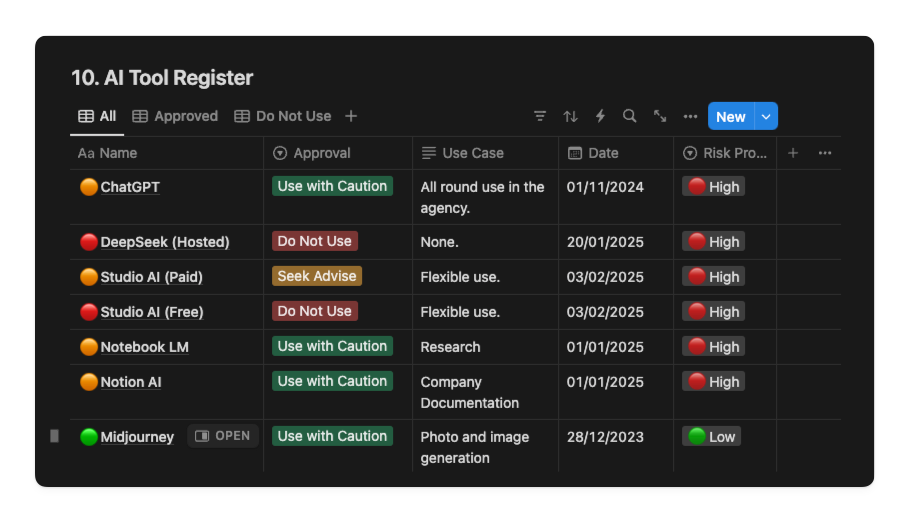

1. The AI Register

Before any AI tool is used in our business, it must be evaluated and added to our AI Register. This is a database of all approved tools, detailing:

- Compliance with major regulations (GDPR, APPs, CCPA, etc.).

- Where data is stored and processed.

- Whether the tool retains user inputs or uses them for training.

- Security measures such as encryption and access controls.
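The register fields above map naturally onto a simple record type. The sketch below is hypothetical: the class names, field names and example vendor are our own, and a real register would be persisted in a database rather than held in memory.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class RegisterEntry:
    """One approved tool in a hypothetical AI Register (field names are illustrative)."""
    tool_name: str
    regulations_met: set[str]   # e.g. {"GDPR", "APPs", "CCPA"}
    data_locations: list[str]   # where data is stored and processed
    retains_inputs: bool        # does the vendor keep prompts and uploads?
    trains_on_inputs: bool      # are inputs used to train models?
    encrypted_in_transit: bool
    encrypted_at_rest: bool
    access_controls: bool       # e.g. SSO, role-based permissions


class AIRegister:
    """In-memory stand-in for the register; tool names are matched case-insensitively."""

    def __init__(self) -> None:
        self._entries: dict[str, RegisterEntry] = {}

    def add(self, entry: RegisterEntry) -> None:
        self._entries[entry.tool_name.lower()] = entry

    def is_registered(self, tool_name: str) -> bool:
        return tool_name.lower() in self._entries

    def get(self, tool_name: str) -> Optional[RegisterEntry]:
        return self._entries.get(tool_name.lower())


register = AIRegister()
register.add(RegisterEntry(
    tool_name="ExampleChat",  # hypothetical vendor
    regulations_met={"GDPR", "APPs"},
    data_locations=["EU"],
    retains_inputs=True,
    trains_on_inputs=False,
    encrypted_in_transit=True,
    encrypted_at_rest=True,
    access_controls=True,
))

print(register.is_registered("examplechat"))   # True
print(register.is_registered("UnvettedTool"))  # False
```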

To streamline this process, we built an AI-powered compliance agent that automatically scans AI tools’ terms and conditions, evaluating them against our core data privacy criteria.
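How the compliance agent works internally is not described here, and an AI-powered agent would normally use a language model for this step. As a much simpler stand-in, the sketch below uses plain keyword matching to pull out the sentences in a terms-and-conditions document that touch on each criterion, so a reviewer (human or model) knows where to look. The criteria names and regular expressions are our own assumptions.

```python
from __future__ import annotations

import re

# Hypothetical criteria and trigger phrases. A real AI-powered agent would
# reason over the text with a language model; keyword hits only say where to look.
CRITERIA_PATTERNS = {
    "training_on_inputs": r"\b(train\w*|improv\w* (our|the) models?)\b",
    "data_retention": r"\b(retain\w*|retention|stor(e|ed|age))\b",
    "cross_border_transfer": r"\b(transfer\w*|outside (the )?(EU|EEA|Australia))\b",
    "arbitration": r"\barbitration\b",
}


def split_sentences(text: str) -> list[str]:
    """Naively split on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def flag_clauses(terms_text: str) -> dict[str, list[str]]:
    """Map each criterion to the sentences that appear to mention it."""
    flagged: dict[str, list[str]] = {}
    for sentence in split_sentences(terms_text):
        for criterion, pattern in CRITERIA_PATTERNS.items():
            if re.search(pattern, sentence, flags=re.IGNORECASE):
                flagged.setdefault(criterion, []).append(sentence)
    return flagged


terms = (
    "We may use your content to train our models. "
    "Content is retained for 30 days. "
    "Disputes are resolved by binding arbitration."
)
for criterion, sentences in flag_clauses(terms).items():
    print(criterion, "->", sentences)
```

Keyword matching will miss clauses phrased in unexpected ways and flag harmless ones, which is why it can only triage the text, not judge it.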

2. AI Compliance Auditor

We developed an AI Compliance Auditor to assess AI tools against global data laws. It analyses:

- Data collection, retention, and deletion policies.

- Cross-border data transfers and storage locations.

- Opt-in vs. opt-out mechanisms for AI training.

- Legal recourse and arbitration clauses.

The Auditor then rates each AI service at one of three levels:

- Compliant – Safe to use with standard precautions.

- Use with Caution – Limited use cases; requires strict controls.

- Non-Compliant – Do not use under any circumstances.
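The Auditor's scoring rules are not published here. Purely as an illustration, the sketch below turns the audit areas into yes/no findings and maps them to the three levels; the thresholds (for example, treating "no deletion on request" as a hard failure) are hypothetical and would need legal input in practice.

```python
from dataclasses import dataclass
from enum import Enum


class Rating(Enum):
    COMPLIANT = "Compliant"
    USE_WITH_CAUTION = "Use with Caution"
    NON_COMPLIANT = "Non-Compliant"


@dataclass
class AuditFindings:
    """Yes/no answers to the audit questions (field names are illustrative)."""
    trains_on_inputs: bool       # are inputs used for model training?
    training_opt_out: bool       # can that training be switched off?
    deletion_on_request: bool    # will the vendor delete our data on request?
    cross_border_transfer: bool  # is data stored or processed outside approved jurisdictions?
    mandatory_arbitration: bool  # do the terms limit legal recourse?


def rate(f: AuditFindings) -> Rating:
    # Hypothetical hard failures: training we cannot opt out of, or no way to delete data.
    if (f.trains_on_inputs and not f.training_opt_out) or not f.deletion_on_request:
        return Rating.NON_COMPLIANT
    # Softer concerns restrict use cases rather than ruling the tool out.
    # A tool that trains on inputs but offers an opt-out lands here: usable
    # only once the opt-out has been switched on.
    if f.trains_on_inputs or f.cross_border_transfer or f.mandatory_arbitration:
        return Rating.USE_WITH_CAUTION
    return Rating.COMPLIANT


print(rate(AuditFindings(
    trains_on_inputs=True, training_opt_out=True, deletion_on_request=True,
    cross_border_transfer=False, mandatory_arbitration=False,
)).value)  # Use with Caution
```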

3. A Dynamic, Regularly Updated Policy

Unlike most corporate policies that gather dust in a drawer, our AI policy is reviewed every eight weeks. This ensures:

- New AI tools are vetted before adoption.

- Compliance checks remain up to date with regulatory changes.

- AI risk assessments evolve alongside emerging threats.
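An eight-week cycle is easy to track mechanically. A minimal sketch, assuming each register entry records the date of its last review (the tool names and dates below are hypothetical):

```python
from __future__ import annotations

from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(weeks=8)


def next_review(last_review: date) -> date:
    """Date on which the next eight-weekly review falls due."""
    return last_review + REVIEW_INTERVAL


def due_for_review(last_reviews: dict[str, date], today: date) -> list[str]:
    """Names of tools whose review date is today or has already passed."""
    return sorted(
        name for name, last in last_reviews.items() if today >= next_review(last)
    )


last_reviews = {"ToolA": date(2025, 1, 6), "ToolB": date(2025, 2, 10)}
print(next_review(date(2025, 1, 6)))                   # 2025-03-03
print(due_for_review(last_reviews, date(2025, 3, 3)))  # ['ToolA']
```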

Guiding Principles for Responsible AI Use

- Encourage Innovation Without Compromising Security
  - AI should be a copilot, not a wildcard risk.
  - Governance should be light-touch yet effective, avoiding unnecessary red tape while ensuring compliance.

- Transparency & Accountability
  - Every AI tool must be logged in our AI Register.
  - Team members are accountable for ensuring compliance with the policy.

- Strict Data Privacy Standards
  - No personally identifiable information (PII) should be entered into third-party hosted AI models. Our data and engineering team may apply different standards when working with privately hosted models.
  - We prioritise tools that allow us to opt out of model training.

- Client Confidentiality is Non-Negotiable
  - AI tools that use inputs for training must not be used to process confidential client data.
  - When in doubt, we do not use the tool.

- Regular Compliance Checks
  - Every AI tool must pass an AI Compliance Audit before it is approved for use.
  - Compliance is reviewed every eight weeks.
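Several of these principles can be enforced as a check before a prompt is sent. The sketch below is a hypothetical gate, not the policy's actual tooling: it refuses tools missing from the register (erring on the side of "when in doubt, we do not use the tool"), blocks prompts that appear to contain PII, and keeps confidential client data away from tools that train on inputs. The function names and tool names are illustrative, the different standards for privately hosted models are not modelled, and the regular expressions only catch obvious emails and phone numbers; real PII detection needs far more than this.

```python
from __future__ import annotations

import re

# Crude illustrative patterns: obvious email addresses and phone-number-like digit runs.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def find_pii(text: str) -> list[str]:
    """Return the kinds of PII the patterns detect in the text."""
    return [kind for kind, pattern in PII_PATTERNS.items() if pattern.search(text)]


def may_submit(
    tool_name: str,
    approved_tools: dict[str, bool],
    prompt: str,
    contains_client_confidential: bool,
) -> tuple[bool, str]:
    """Check one prompt against the principles before sending it to a hosted tool.

    approved_tools maps each registered tool to whether it trains on inputs.
    """
    if tool_name not in approved_tools:
        return False, "not in AI Register"
    if find_pii(prompt):
        return False, "possible PII in prompt"
    if contains_client_confidential and approved_tools[tool_name]:
        return False, "tool trains on inputs"
    return True, "ok"


approved = {"ExampleChat": False, "TrainingBot": True}  # hypothetical tools
print(may_submit("UnvettedTool", approved, "Summarise this brief", False))
print(may_submit("ExampleChat", approved, "Email jane@example.com", False))
print(may_submit("TrainingBot", approved, "Draft the launch plan", True))
print(may_submit("ExampleChat", approved, "Draft the launch plan", True))
```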

AI Compliance Must Be a Business Imperative, Not an Afterthought

AI presents enormous opportunities, but also significant risks, especially around data governance. Businesses cannot afford a laissez-faire approach to AI compliance.

By implementing an AI Register, Compliance Auditor, and dynamic policy reviews, we have created a governance framework that enables innovation without compromising security and privacy.

If you are adopting AI within your organisation, the time to establish clear governance is now. Data compliance is not an afterthought; it is a business-critical necessity.

Let us ensure AI is a force for good, not a compliance nightmare waiting to happen.